Automation at Your Core

We often hear that automating repetitive tasks improves productivity. But how do we decide when automation is truly worth the effort?

1 At OKX, while managing end-to-end testing servers, I frequently needed to SSH into servers to diagnose issues and pull relevant logs to my local machine for further investigation. This was tedious but manageable, thanks to my terminal’s auto-completion. However, as we expanded to four servers (two in Hong Kong, two in Singapore), keeping track of server IPs, usernames, and passwords for SSH became a hassle. That’s when I built a small utility to simplify the process.

With this tool, logging into a Hong Kong server (without typing a password) was as simple as:

$ sshe login hk

And pulling a certain file from a server was just:

$ sshe pull hk path/to/log

This significantly improved efficiency and freed up my time for more important tasks.
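The internals of sshe are not shown here, so the following is only a minimal sketch of the idea. It assumes a hypothetical alias table (HOSTS) with placeholder usernames and addresses, and it leaves authentication to SSH keys or an agent. It builds the ssh and scp commands instead of running them:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    user: str
    address: str


# Hypothetical alias table: names, users, and addresses are placeholders.
HOSTS = {
    "hk": Host(user="tester", address="203.0.113.10"),
    "sg": Host(user="tester", address="203.0.113.20"),
}


def login_command(alias: str) -> list[str]:
    """Build the ssh argv for an alias such as 'hk'."""
    host = HOSTS[alias]
    return ["ssh", f"{host.user}@{host.address}"]


def pull_command(alias: str, remote_path: str, local_dir: str = ".") -> list[str]:
    """Build the scp argv that copies a remote file into a local directory."""
    host = HOSTS[alias]
    return ["scp", f"{host.user}@{host.address}:{remote_path}", local_dir]


if __name__ == "__main__":
    print(" ".join(login_command("hk")))
    print(" ".join(pull_command("hk", "path/to/log")))
```

Keeping the alias lookup in one table means adding a fifth server is a one-line change, which is most of the value of a wrapper like this.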

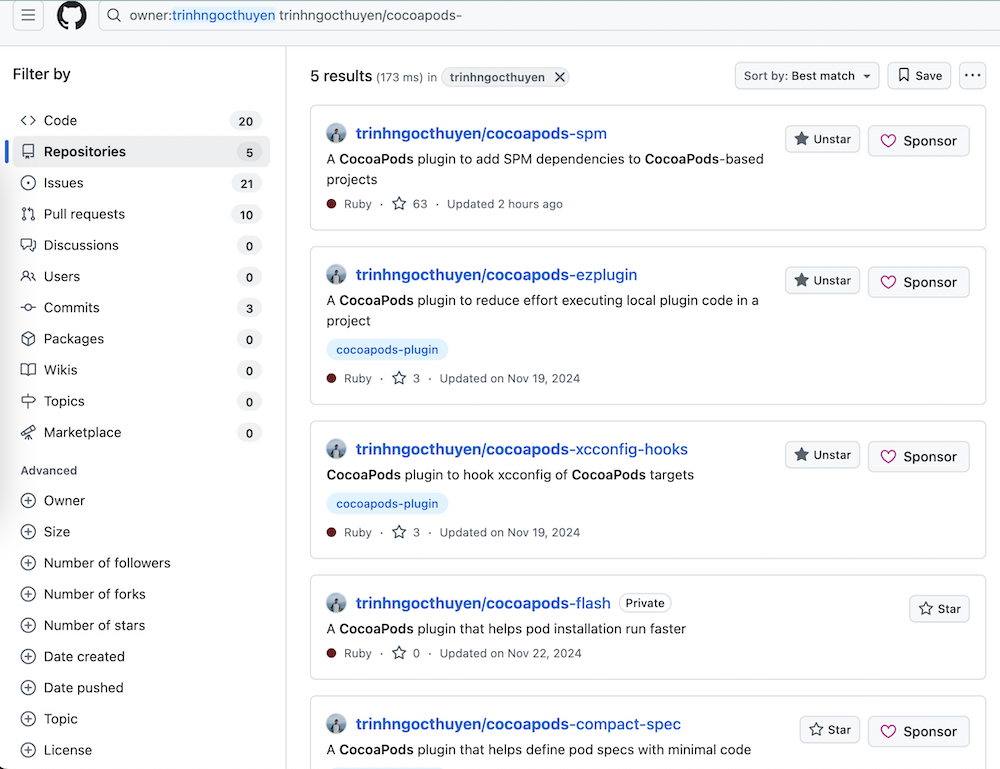

2 Beyond corporate work, I also maintain several open-source projects on GitHub, including some Python packages and CocoaPods plugins. From the start, I used GitHub Actions to deploy new releases. All I had to do was trigger a workflow from the GitHub Actions dashboard. This worked well when managing just one or two projects. However, as the number of repos grew, synchronizing workflow changes across them became a maintenance burden. To reduce this overhead, I extracted the deployment workflow into a shared repo: gh-actions. However, the need for maintenance didn’t end there.

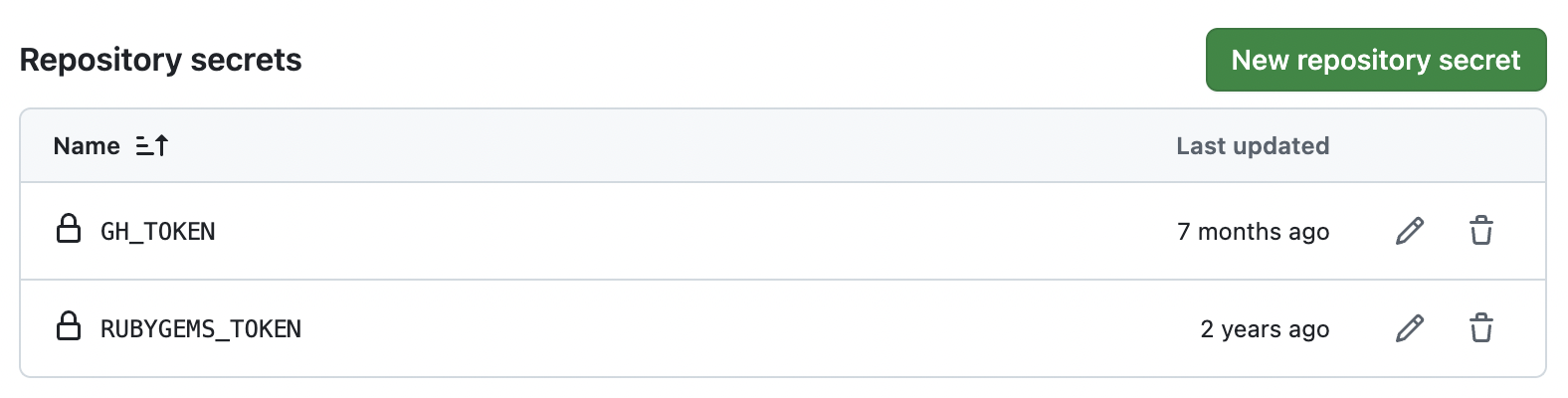

Technically, deploying a CocoaPods plugin (which is a Ruby gem) requires 2 tokens:

- A RubyGems.org token to publish the gem.

- A GitHub deployment token to create a release and bump the version.

Managing these tokens manually seemed fine… until a token expired. I had to generate a new one and update the corresponding secrets across all of these repos. This manual process was tedious, requiring navigation through several GitHub settings pages (Repo → Settings → Secrets and variables → Actions → update the secret). Once, my token expired while I was actively maintaining two repos (cocoapods-spm and e2e-mobile). I updated the token only for these two, assuming I wouldn’t need it for the others because I had no updates planned for them. This was a mistake 😅! Later, when I had to update another plugin, the deployment broke, which was frustrating and cost me time on a preliminary investigation.

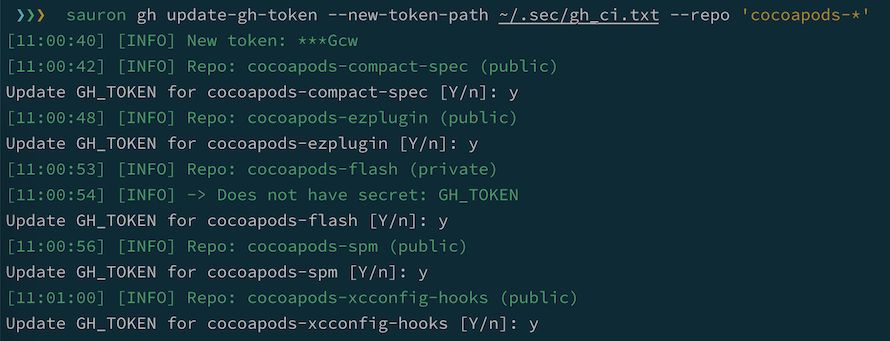

I then wrote a CLI tool called sauron (the name comes from LOTR) to help with some admin tasks. With this tool, I can update tokens across multiple repos matching a pattern. Under the hood, this can be done with the GitHub CLI or the GitHub API.

$ sauron gh update-token --pattern 'cocoapods-*'

Of course, setting a long-lived token (e.g., one without expiration) could have avoided this issue. But automation also makes setup effortless for new repos.
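How sauron does this internally is not shown, so here is a rough sketch of one way to update a secret across repos matching a pattern with the GitHub CLI. The function names, the owner, and the secret name are illustrative assumptions. The only external command used is `gh secret set <name> --repo <owner>/<repo>`, and the token is passed on stdin so it never appears in the process arguments:

```python
import fnmatch
import subprocess


def matching_repos(repos: list[str], pattern: str) -> list[str]:
    """Return the repo names that match a shell-style pattern like 'cocoapods-*'."""
    return [name for name in repos if fnmatch.fnmatchcase(name, pattern)]


def set_secret_command(owner: str, repo: str, secret_name: str) -> list[str]:
    """Build the `gh secret set` argv; the secret value is supplied on stdin."""
    return ["gh", "secret", "set", secret_name, "--repo", f"{owner}/{repo}"]


def update_token(owner: str, repos: list[str], pattern: str,
                 secret_name: str, token: str) -> list[str]:
    """Update one secret in every matching repo and return the repos touched."""
    updated = []
    for repo in matching_repos(repos, pattern):
        # Requires an authenticated `gh` on PATH; raises if the command fails.
        subprocess.run(set_secret_command(owner, repo, secret_name),
                       input=token, text=True, check=True)
        updated.append(repo)
    return updated
```

Because the loop covers every matching repo, a repo that is currently inactive still gets the fresh token, which is exactly the case the manual process missed.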

3 Automation isn’t just about scripting repetitive tasks - it’s also about designing workflows and orchestrating them together. Back to my deployment story: although the process was automated, it still required a manual trigger. After fixing bugs in cocoapods-spm, for example, I had to manually run the deploy workflow to release a new version. This is where human error creeps in… because I sometimes forgot 😅.

To prevent it, I introduced a weekly auto-deployment workflow:

- Milestone assignment: Each project has an active milestone (e.g., 1.0.2) that indicates the yet-to-be-released version. When a PR gets merged, it is assigned to this milestone.

- Scheduled deployment: A cron job runs every Sunday, checking if the active milestone has any associated PRs. If so, it triggers a deployment.

- Milestone management: After a release, the workflow closes the current milestone and creates a new milestone for the next version.
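The three steps above can be sketched as a small decision function. This is a simplified model rather than the actual workflow: the Milestone class is a stand-in for data fetched from GitHub, the deployment is a placeholder print, and the patch-level bump for the next milestone is an assumption (the scheme for choosing the next version is not stated).

```python
from dataclasses import dataclass, field


@dataclass
class Milestone:
    title: str  # the yet-to-be-released version, e.g. "1.0.2"
    merged_prs: list[int] = field(default_factory=list)


def next_version(version: str) -> str:
    """Assumption: the next milestone is a patch bump (1.0.2 -> 1.0.3)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return f"{major}.{minor}.{patch + 1}"


def weekly_run(active: Milestone) -> Milestone:
    """Return the milestone that is active after the scheduled run."""
    if not active.merged_prs:
        return active  # nothing merged since the last release, so skip
    print(f"deploying {active.title}")  # placeholder for the real deployment
    # The released milestone is closed and a fresh, empty one takes its place.
    return Milestone(title=next_version(active.title))


if __name__ == "__main__":
    print(weekly_run(Milestone("1.0.2", merged_prs=[41, 42])).title)
```

Skipping the run when the milestone is empty keeps the schedule from publishing releases that contain no changes.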

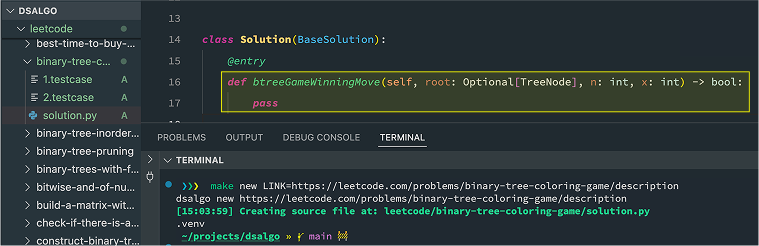

4 Another automation win came from solving LeetCode problems. I prefer coding locally for a better debugging experience. Initially, each solution file was isolated, and I needed to write a bunch of boilerplate code to test against the given test cases. The amount of unrelated code was even larger for graph/tree problems.

class Solution:
    def run(self, x: int) -> int:
        pass  # implementation goes here


def compare(actual, expected):
    assert actual == expected, f'Expected: {expected}, actual: {actual}'


solution = Solution()
compare(solution.run(1), 10)
compare(solution.run(2), 20)

I should have focused on writing the solution instead. So, I spent some time simplifying the setup.

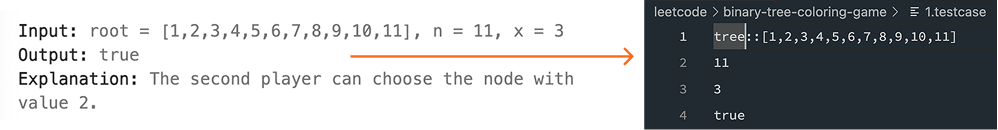

- Running make new LINK=<web-link> automatically generates:
  - A solution file with the prefilled code (function signature as in the LeetCode code panel)
  - Test case files parsed from the problem description.

  Though the parsing logic is heuristic, it works most of the time, giving me a great sense of productivity.

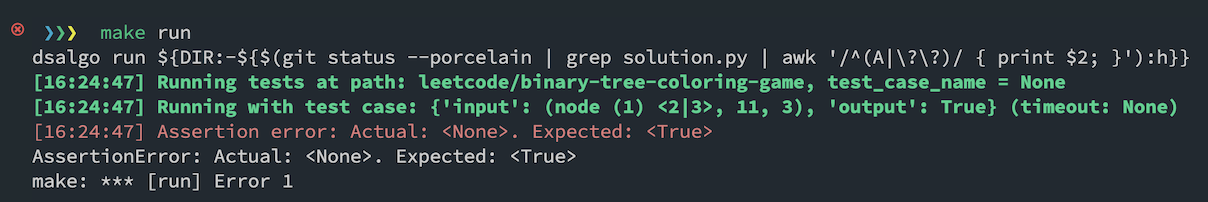

- Running make run executes the solution against the test cases.
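What sits behind make run is not shown, so the following is a hedged sketch of a shared runner that removes the per-file boilerplate. The JSON test case format (a list of objects with "args" and "expected") and the function names are assumptions made for illustration:

```python
import json
from pathlib import Path
from typing import Any, Callable


def load_cases(path: str) -> list[dict[str, Any]]:
    """Read test cases from a JSON file (assumed format, see above)."""
    return json.loads(Path(path).read_text())


def run_cases(solve: Callable[..., Any], cases: list[dict[str, Any]]) -> list[str]:
    """Run `solve` on every case and return one message per mismatch."""
    failures = []
    for case in cases:
        actual = solve(*case["args"])
        if actual != case["expected"]:
            failures.append(f"Expected: {case['expected']}, actual: {actual}")
    return failures


if __name__ == "__main__":
    # Stand-in for a real solution; cases are inlined instead of loaded from disk.
    def times_ten(x: int) -> int:
        return x * 10

    cases = [{"args": [1], "expected": 10}, {"args": [2], "expected": 20}]
    print(run_cases(times_ten, cases) or "all cases passed")
```

Collecting failures instead of asserting on the first mismatch lets a single run report every failing case.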

5 Returning to our original question: When is the right time to automate? The answer varies based on effort versus benefit. At the end of the day, it’s the ROI (return on investment) that drives the decision.

For me, since I already work closely with automated processes, the time and effort needed to set up a given automation might be relatively low compared with what an iOS engineer who doesn’t regularly deal with such tooling would need.

A good rule of thumb is to consider repetition:

- If a manual task happens several times a week, automation is likely worth it.

- If a task occurs rarely for any one person, say once every two months, but is shared across a team (e.g., members take turns doing it weekly), automating it might still yield significant savings overall.

Of course, the choice also depends on other factors such as capacity and priority.
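To make the effort-versus-benefit comparison concrete, here is a back-of-the-envelope break-even calculation. The formula is a simplification (it ignores the cost of maintaining the automation), and the numbers in the usage example are made up for illustration:

```python
def weeks_to_break_even(build_hours: float, minutes_saved_per_run: float,
                        runs_per_week: float) -> float:
    """Weeks until the time saved equals the time spent building the automation.

    `runs_per_week` counts runs across the whole team, so a task that each
    person does rarely can still add up to a frequent task overall.
    """
    hours_saved_per_week = minutes_saved_per_run * runs_per_week / 60
    return build_hours / hours_saved_per_week


if __name__ == "__main__":
    # Illustrative numbers: 4 hours to build, 10 minutes saved, 3 runs a week.
    print(weeks_to_break_even(build_hours=4, minutes_saved_per_run=10, runs_per_week=3))
```

With these made-up inputs the automation pays for itself after 8 weeks; halving the build time or doubling the frequency halves that figure.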

…

Lastly, automation doesn’t always mean large-scale solutions. Small and incremental improvements matter too. Start with micro-automations, make it a habit, and it eventually pays off.
